Thousands of ChatGPT user accounts are at risk

According to research published by Group-IB, ChatGPT user accounts may not be as secure as you think: credentials for more than 100,000 ChatGPT user accounts have been compromised and traded on the dark web.

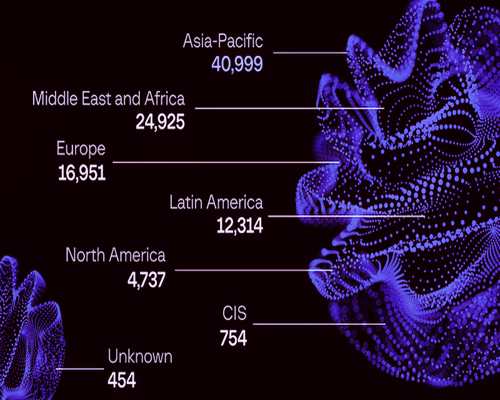

Group-IB, an international cybersecurity company based in Singapore, identified 101,134 stealer-infected devices with saved ChatGPT credentials.

The report found that between June 2022 and May 2023, China and India accounted for the largest share (40.5 percent) of ChatGPT accounts stolen by malware in the Asia-Pacific region. Other countries significantly affected by these attacks include the United States, Vietnam, Brazil, and Egypt.

The company’s threat intelligence platform found the compromised credentials in the logs of data-stealing malware traded on dark web marketplaces. The number of affected accounts reached 26,802 in May 2023, an alarming figure for users.

Group-IB pointed out that the widespread use of ChatGPT in business communication and software development means that sensitive information is often shared on the platform. This makes it an attractive target for criminals seeking illicit gain. Group-IB added that ChatGPT accounts have gained significant popularity in various underground communities.

The company analyzed these communities and found that most ChatGPT user accounts were stolen by data-stealing malware named Raccoon. Along with Raccoon, the Vidar and RedLine stealers account for the largest number of compromised hosts with access to ChatGPT.

Information stealers are a type of malware that collects saved credentials, bank card details, cryptocurrency wallet information, cookies, browsing history, and other information from browsers installed on infected computers, then sends all of this data to the malware operator. Because this type of malware operates indiscriminately, it infects as many computers as possible to collect the maximum amount of data.

Dmitry Shestakov, head of threat intelligence at Group-IB, explained the risk: “Many companies are integrating ChatGPT into their workflows. Employees enter classified correspondence or use the bot to optimize proprietary code. Because ChatGPT’s standard configuration retains all conversations, this could inadvertently hand sensitive information to threat actors if they obtain account credentials. At Group-IB, we constantly monitor underground communities to identify these types of accounts early.”

To reduce the impact of such attacks, experts recommend that users enable two-step verification, so that a one-time code is required in addition to the password when logging in to a ChatGPT account. This makes the login process slightly longer, but it is an important way to protect the account even if the password is stolen.
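
To make the idea of a two-step verification code more concrete, here is a minimal sketch of how a time-based one-time password (TOTP, as described in RFC 6238) can be computed. This is an illustration under assumptions: the article does not say which kind of second factor is used, and the function name `totp` and the demo secret are hypothetical, chosen only for this example.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1 variant) for a shared secret."""
    if at_time is None:
        at_time = time.time()
    # Number of whole time steps since the Unix epoch, as an 8-byte big-endian counter.
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Demo secret taken from the RFC 6238 test vectors; never reuse it for a real account.
    demo_secret = b"12345678901234567890"
    print(totp(demo_secret, at_time=59, digits=8))  # RFC 6238 test vector: 94287082
    print(totp(demo_secret))  # code for the current 30-second window
```

Because the code depends on both a secret stored on the user's device and the current time, a password taken from a stealer log is not enough on its own to pass this kind of login check.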